DeepSeek R2: The AI Underdog Set to Flip the Script on Silicon Valley

By the time you finish this article, the price of running your favourite AI chatbot might already be out of date.

In the high-stakes world of artificial intelligence, where tech behemoths like OpenAI, Google, and Anthropic battle it out in trillion-dollar arenas, an unlikely disruptor from Hangzhou is rewriting the rules of the game. DeepSeek, a relatively quiet Chinese AI startup, has been sending shockwaves through the industry since the surprise debut of its R1 model earlier this year. Now, with the impending launch of DeepSeek R2, we may be witnessing the dawn of a new AI economy, one that doesn’t cost an arm, a leg, and a few GPUs to operate.

Let’s unpack the tech, the gossip, and the global ramifications behind what might be the most cost-efficient large language model (LLM) the world has ever seen.

What is DeepSeek R2? A Snapshot of the Specs

According to industry insiders, tech bloggers, and a few now-deleted posts from Chinese social platforms, DeepSeek R2 isn’t just an iteration of its predecessor—it’s a quantum leap.

Key Specs of DeepSeek R2:

- 1.2 trillion-parameter model with a hybrid Mixture-of-Experts (MoE) design

- 78 billion active parameters per token

- Trained on 5.2 petabytes of data

- 97.3% cheaper to run than GPT-4

- Usage costs: $0.07/M input tokens, $0.27/M output tokens
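To put those numbers in perspective, here is a back-of-the-envelope sketch in Python. It uses only the leaked, unconfirmed figures listed above; the workload size (50 million input tokens and 10 million output tokens a month) and the function names are made up purely for illustration.

```python
# Figures from the leaked R2 specs quoted above (all unconfirmed).
TOTAL_PARAMS = 1.2e12         # 1.2 trillion parameters in total
ACTIVE_PARAMS = 78e9          # 78 billion parameters active per token
INPUT_PRICE_PER_M = 0.07      # USD per million input tokens
OUTPUT_PRICE_PER_M = 0.27     # USD per million output tokens
CLAIMED_SAVING = 0.973        # the "97.3% cheaper" claim


def active_fraction() -> float:
    """Share of the model's parameters that fire for a single token."""
    return ACTIVE_PARAMS / TOTAL_PARAMS


def request_cost(input_tokens: int, output_tokens: int) -> float:
    """Cost in USD for a given number of input and output tokens."""
    total = input_tokens * INPUT_PRICE_PER_M + output_tokens * OUTPUT_PRICE_PER_M
    return total / 1_000_000


def implied_baseline_price(r2_price: float, saving: float = CLAIMED_SAVING) -> float:
    """Price a competitor would have to charge for the saving claim to hold."""
    return r2_price / (1.0 - saving)


# Hypothetical monthly workload, chosen only for illustration.
monthly = request_cost(input_tokens=50_000_000, output_tokens=10_000_000)

print(f"Active share of parameters: {active_fraction():.1%}")
print(f"Hypothetical monthly bill: ${monthly:.2f}")
print(f"Implied baseline output price: ${implied_baseline_price(OUTPUT_PRICE_PER_M):.2f}/M tokens")
```

On these figures only about 6.5% of the model is active for any one token, and the hypothetical workload costs $6.20 a month. The last line simply inverts the 97.3% claim; it is not a quoted GPT-4 price.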

In comparison, GPT-4 Turbo, Google’s Gemini 2.0, and Meta’s LLaMA 3.1 are practically luxury brands at this point. DeepSeek isn’t just competing—it’s redefining what competition looks like.

R1 already gained attention when it momentarily overtook ChatGPT on the App Store. If R2 even matches the buzz—let alone the benchmarks—we could see a global AI adoption curve bent overnight.


Hybrid MoE & MLA: Smarter, Faster, Cheaper

What powers this cost-effectiveness? DeepSeek has taken a page from the MoE playbook and rewritten it. The hybrid Mixture-of-Experts architecture allows only specific parts of the model to activate for a given task.

Think of it as a brain that only uses the regions it needs, rather than firing up the whole thing every time someone asks a question.

Combine that with Multi-head Latent Attention (MLA), which compresses the attention keys and values into a compact latent representation so the model needs far less memory while generating text, and you’ve got a system that’s faster, leaner, and eerily clever.

The MoE setup avoids redundancy. Traditional dense LLMs run every parameter for every token, like boiling a full kettle every time you want a single cup of tea. DeepSeek flicks on just the right neural “switches,” saving energy, cost, and time.
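For the curious, here is a toy sketch of the general idea behind Mixture-of-Experts routing, written in plain Python. It is not DeepSeek's implementation: the eight "experts" are trivial functions, the router scores are random numbers rather than the output of a learned layer, and the choice of two experts per token is an assumption made for illustration.

```python
import math
import random


def softmax(scores):
    """Turn raw router scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_scores, k):
    """Pick the k highest-scoring experts and renormalise their weights."""
    probs = softmax(router_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def moe_forward(x, experts, router_scores, k=2):
    """Run only the selected experts and blend their outputs by weight."""
    selected = route_top_k(router_scores, k)
    output = sum(weight * experts[i](x) for i, weight in selected)
    return output, [i for i, _ in selected]


# Toy experts: expert i just multiplies its input by (i + 1).
NUM_EXPERTS = 8
experts = [lambda x, scale=i + 1: scale * x for i in range(NUM_EXPERTS)]

# In a real model these scores come from a learned router; here they are random.
rng = random.Random(0)
scores = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]

output, used = moe_forward(1.0, experts, scores, k=2)
print(f"Experts used: {sorted(used)} out of {NUM_EXPERTS}")
print(f"Blended output: {output:.3f}")
```

Only two of the eight expert functions are ever called for this input, which is the whole point: the other six cost nothing.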

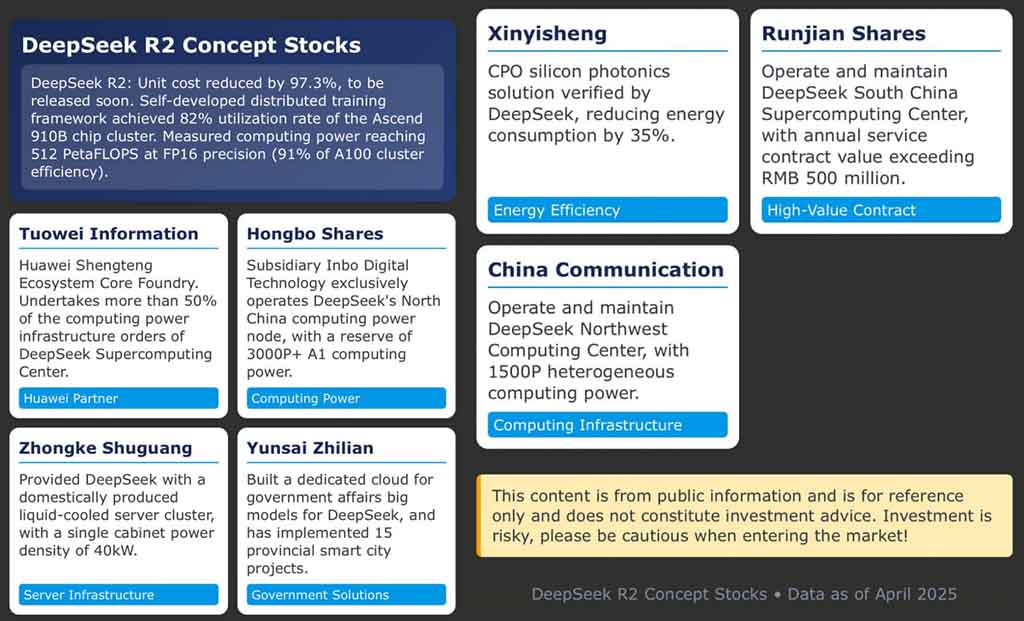

Huawei Chips: The Silicon Shake-Up

The most geopolitically significant piece? R2 has reportedly been trained entirely on Huawei’s Ascend 910B chips—a massive statement in the context of US-China tech tensions.

Hardware Details:

- 82% utilization across Huawei Ascend 910B chips

- Roughly 91% of the efficiency of comparable Nvidia A100-based clusters

- Reached 512 PetaFLOPS (FP16 precision)
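The leaked figures don't say whether 512 PetaFLOPS is the cluster's theoretical peak or the throughput it actually sustained. The short Python sketch below works out both readings from the reported 82% utilization; the function names are illustrative, and neither result is a confirmed number.

```python
REPORTED_PFLOPS = 512.0   # FP16 figure from the leak (unconfirmed)
UTILIZATION = 0.82        # reported utilization on Ascend 910B chips


def sustained_if_peak(peak_pflops: float, utilization: float) -> float:
    """Reading 1: the reported figure is the theoretical peak."""
    return peak_pflops * utilization


def peak_if_sustained(sustained_pflops: float, utilization: float) -> float:
    """Reading 2: the reported figure is what the cluster actually sustained."""
    return sustained_pflops / utilization


print(f"If 512 is the peak, sustained is about "
      f"{sustained_if_peak(REPORTED_PFLOPS, UTILIZATION):.1f} PFLOPS")
print(f"If 512 is sustained, the peak is about "
      f"{peak_if_sustained(REPORTED_PFLOPS, UTILIZATION):.1f} PFLOPS")
```

Either way the gap between the two readings is around 200 PetaFLOPS, which is one more reason to treat the leak with caution until official numbers appear.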

Translation: DeepSeek is flexing not just algorithmic innovation, but hardware sovereignty.

This shift away from US-based supply chains isn’t just a technical feat—it’s a strategic manoeuvre that challenges Nvidia’s dominance and points to a parallel AI ecosystem emerging in China.

Work Culture at DeepSeek: The Human Advantage

Amidst the focus on chips and code, one surprising differentiator may be DeepSeek’s work culture. In contrast to China’s notorious “996” work schedule, DeepSeek’s founder Liang Wenfeng promotes a culture of autonomy and trust.

“Liang gave us control and treated us as experts,” said one young researcher.

This collaborative culture could be the secret behind their rapid innovation cycles, and it’s a feature, not a bug, in DeepSeek’s larger story. In an industry starved for talent, this could attract top minds tired of burnout.

From Rumour Mill to Real Deal?

There’s no shortage of sceptics. The DeepSeek R2 specs are largely based on leaks and deleted posts from platforms like Jiuyangongshe. Many have yet to be officially confirmed.

There are even allegations of data misuse, such as sending information to ByteDance servers, though these claims remain unverified. Still, interest continues to grow. Even Deedy Das of Menlo Ventures shared these figures, in a post that drew over 600,000 views on X.

Given DeepSeek’s track record with R1 and open-source releases like V3, the company has already proven it can walk the walk, not just talk the hype.

Implications for the Global AI Market

If R2 delivers even half of what’s promised, it could disrupt global pricing, democratise access to AI, and shake Big Tech out of its comfort zone.

Global Impact Possibilities:

- Affordable APIs for small businesses and local governments

- New opportunities for AI adoption in developing markets

- Open-source ecosystem growth as a counter to proprietary models

“DeepSeek’s success will likely push companies worldwide to accelerate their efforts, breaking the stranglehold of the few dominant players,” said Vijayasimha Alilughatta of Zensar.

(I really have to concentrate on that spelling – be impressed – or not)

Just as cloud computing democratised tech infrastructure in the 2010s, DeepSeek R2 could democratise AI capabilities in the 2020s.

The AI Arms Race Accelerates

With GPT-5 and next-gen Gemini models in the pipeline, competition is fierce. But DeepSeek’s focus on efficiency, independence, and open access makes it an unpredictable challenger.

This isn’t just an East-vs-West story. It’s about who can deliver intelligence affordably to the world. And DeepSeek is positioning itself to do just that.

Whether they IPO or stay rogue, their influence is undeniable.

Final Word: The New AI Economy Is Here

DeepSeek R2 isn’t just another model—it’s a statement. One that signals the decentralisation of AI innovation and challenges monopolistic pricing structures.

Whether it soars or stumbles, the message is clear: The world is ready for a new kind of AI player. One that’s affordable, open, and built for everyone.

If that sounds like a revolution, it’s because it is.

Check out how DeepSeek fared in a recent round of AI-vs-AI generative AI tests.